Active Listening in the Age of AI: Can Machines Teach Reps to Hear Better?

SAASGROWTHAI

9/30/2025 · 6 min read

For CROs, RevOps leaders, and sales managers at B2B SaaS and tech-enabled companies with 10–200 sellers. We focus on practical, revenue-first listening behaviors and AI-enabled workflows — not tool hype or generic platitudes.

We record almost every sales call now. Yet many teams still miss the moments that move deals forward. In complex, multi-threaded buying, reps get limited airtime and even less room for error. Can AI actually improve active listening in sales, or does it just count words? The short answer: AI can scale the mechanics of listening, and humans bring the empathy and judgment that build trust. Together, they turn conversations into pipeline.

At BriskFab, we help revenue leaders operationalize this blend — training reps on listening behaviors, instrumenting RevOps, and using AI to coach and capture buyer language across the funnel.

What active listening means in revenue terms

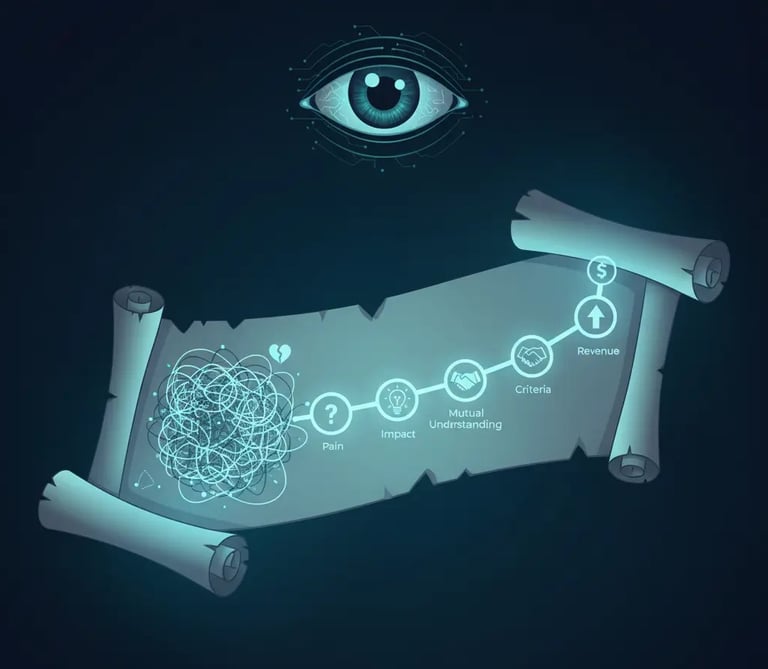

Active listening isn’t silence. It’s a set of visible, coachable behaviors that advance the buyer’s thinking and the deal:

Ask layered questions (pain → impact → priority → criteria → risks)

Paraphrase and label to confirm understanding

Use purposeful silence to invite depth

Capture buyer language verbatim and reuse it in follow-ups and demos

Close with a mutual next step (owner, date, outcome)

Why it works:

Great listening is collaborative and makes the speaker feel smarter — buyers equate that with competence (HBR)

High-quality listening reduces defensiveness and increases openness — crucial in discovery and objection handling (Human Communication Research; EJWOP)

In classroom research, longer pauses after questions (“wait time”) led to richer answers, a useful analog for sales discovery (Rowe, 1986; SAGE).

Tie these behaviors to outcomes you track: higher SQO rates, shorter cycles, stronger win rates, and tighter forecasts.

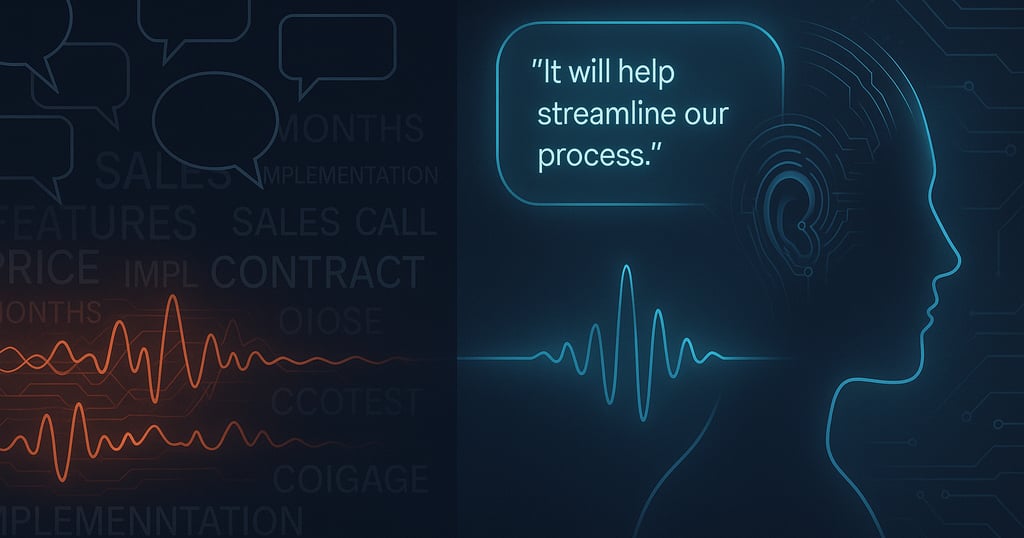

Can AI teach listening? Yes — the mechanics at scale

Conversation intelligence plus large language models (LLMs) can analyze transcripts and audio to coach core listening behaviors consistently, call after call.

What AI can coach today

Text-level

Identify open vs. closed questions and “depth chains”

Flag missed paraphrases; suggest tighter summaries

Extract next steps (owner/date) and alert when they’re missing

Check agenda coverage against your discovery rubric

Pull buyer verbatim phrases for follow-ups and demo storylines
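To make the text-level checks concrete, here is a minimal Python sketch of one of them: tagging a rep's questions as open or closed. It assumes you already have the rep's turns as plain strings. The keyword lists and function names (`question_mix`, `classify_question`) are hypothetical; production tools generally rely on LLM prompts or trained classifiers rather than word lists.

```python
import re

# Hypothetical keyword lists for illustration only; conversation-intelligence
# tools typically rely on trained models or LLM prompts rather than word lists.
OPEN_STARTERS = ("what", "how", "why", "tell me", "walk me through",
                 "describe", "help me understand")
CLOSED_STARTERS = {"do", "does", "did", "is", "are", "was", "were", "can",
                   "could", "will", "would", "should", "have", "has", "had"}


def split_questions(utterance: str) -> list[str]:
    """Split a rep utterance into sentences and keep only the questions."""
    sentences = re.split(r"(?<=[.?!])\s+", utterance.strip())
    return [s for s in sentences if s.endswith("?")]


def classify_question(question: str) -> str:
    """Label a question 'open', 'closed', or 'other' from its leading words."""
    words = re.findall(r"[a-z']+", question.lower())
    if not words:
        return "other"
    lead = " ".join(words[:4])
    if any(lead == s or lead.startswith(s + " ") for s in OPEN_STARTERS):
        return "open"
    if words[0] in CLOSED_STARTERS:
        return "closed"
    return "other"


def question_mix(rep_utterances: list[str]) -> dict[str, int]:
    """Count open, closed, and other questions across a rep's turns."""
    counts = {"open": 0, "closed": 0, "other": 0}
    for utterance in rep_utterances:
        for q in split_questions(utterance):
            counts[classify_question(q)] += 1
    return counts
```

Feed it only the rep's diarized turns. A discovery call dominated by closed questions is an easy coaching flag.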

Audio-level (with diarization/timestamps)

Talk-to-listen ratio by role

Interruptions and overlaps

Purposeful silence and response latency
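The audio-level metrics reduce to arithmetic over diarized, timestamped segments. The sketch below assumes each segment already carries a role label (`rep` or `buyer`) and start/end times in seconds; the `Segment` class and `listening_metrics` function are illustrative names, not a vendor API. Talk-to-listen is expressed here as the rep's share of total talk time, and an interruption is counted only when the rep starts before the preceding speaker's segment ends.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str   # role label after diarization, e.g. "rep" or "buyer"
    start: float   # seconds from call start
    end: float


def listening_metrics(segments: list[Segment], rep: str = "rep") -> dict:
    """Rep talk share, interruptions, and average pause before the rep responds."""
    segs = sorted(segments, key=lambda s: s.start)
    rep_time = sum(s.end - s.start for s in segs if s.speaker == rep)
    other_time = sum(s.end - s.start for s in segs if s.speaker != rep)

    interruptions = 0
    latencies = []
    for prev, cur in zip(segs, segs[1:]):
        # Only look at hand-offs from another speaker to the rep.
        if prev.speaker == rep or cur.speaker != rep:
            continue
        gap = cur.start - prev.end
        if gap < 0:
            interruptions += 1      # rep started before the other speaker finished
        else:
            latencies.append(gap)   # silence before the rep responded

    total = rep_time + other_time
    return {
        "rep_talk_share": rep_time / total if total else 0.0,
        "interruptions": interruptions,
        "avg_response_latency_s": sum(latencies) / len(latencies) if latencies else 0.0,
    }
```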

A quick example:

Buyer: “Our ops team spends 10–12 hours a week stitching data for leadership, and it’s creating reporting gaps.”

Rep: “We can automate that. Let me show you a dashboard.”

AI coach: “Paraphrase missing. Try: ‘It sounds like manual stitching costs ops a day a week and erodes leadership’s trust in reporting — did I capture that?’ Then ask: ‘If you got that day back, what would change?’”

This isn’t abstract. In a large field study of customer-support agents, a generative AI assistant lifted productivity by ~14%, with the biggest gains for less-experienced agents. The mechanism: surfacing “what top performers say next” in real time (NBER). Support isn’t sales, but the parallel is close: expect a faster ramp and more consistent discovery.
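The AI coach in the quick example has to decide whether the rep reflected the buyer's words before pitching. Here is a minimal sketch of that check, assuming a simple heuristic: a reflective phrase plus at least two shared content words. The marker and stopword lists are hypothetical, and an LLM-based coach would judge paraphrase quality semantically instead of by keyword overlap.

```python
import re

# Hypothetical cues for illustration; an LLM-based coach would judge paraphrases
# semantically rather than by keyword overlap.
REFLECTIVE_MARKERS = ("it sounds like", "what i'm hearing", "if i understand",
                      "did i capture", "did i get that right")
STOPWORDS = {"that", "this", "with", "have", "from", "they", "them", "what",
             "when", "where", "which", "would", "could", "should", "there",
             "their", "about", "like", "just", "will", "your", "into", "been"}


def content_words(text: str) -> set[str]:
    """Lowercased words longer than three letters, minus common stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if len(w) > 3 and w not in STOPWORDS}


def paraphrase_check(buyer_turn: str, rep_turn: str, min_shared: int = 2) -> dict:
    """Flag whether the rep reflected the buyer's words before moving on."""
    rep_norm = rep_turn.lower().replace("\u2019", "'")  # normalize curly apostrophes
    has_marker = any(m in rep_norm for m in REFLECTIVE_MARKERS)
    shared = sorted(content_words(buyer_turn) & content_words(rep_turn))
    return {
        "has_marker": has_marker,
        "shared_terms": shared,
        "paraphrased": has_marker and len(shared) >= min_shared,
    }
```

Run on the quick example, the rep's "We can automate that" reply shares no content words with the buyer and has no reflective phrase, so it is flagged; the coach's suggested paraphrase passes, sharing "leadership", "reporting", "stitching", and "week".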

How it fits in your stack

ASR (e.g., Whisper) creates accurate, timestamped transcripts (OpenAI, 2022); Whisper does not label speakers, so pair it with a diarization step to separate rep from buyer

LLM prompts score behaviors and extract CRM-ready data

RevOps workflows update fields, create tasks, personalize sequences, and inform PMM/Product
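The LLM step in this stack can be sketched as a prompt plus validation. In the Python below, `llm` is a placeholder for whatever model client you use (no specific vendor API is assumed), and the field names are hypothetical stand-ins for your CRM schema. Two guards reflect ideas from this article: quotes are kept only if they appear verbatim in the transcript, and empty fields are surfaced as alerts, such as a call that ended without a dated next step.

```python
import json
from typing import Callable

# Hypothetical CRM field names; map them to your own CRM schema.
FIELDS = ("pain", "selection_criteria", "next_step_owner", "next_step_date", "buyer_quotes")

PROMPT = (
    "From the sales call transcript below, return only JSON with the keys "
    "pain, selection_criteria, next_step_owner, next_step_date, and buyer_quotes "
    "(a list of verbatim buyer phrases). Use null for anything not stated.\n\n"
    "Transcript:\n{transcript}"
)


def extract_crm_fields(transcript: str, llm: Callable[[str], str]) -> dict:
    """Ask an LLM for structured fields, then sanity-check the result."""
    data = json.loads(llm(PROMPT.format(transcript=transcript)))
    payload = {key: data.get(key) for key in FIELDS}
    # Simple hallucination guard: keep only quotes that appear verbatim in the transcript.
    payload["buyer_quotes"] = [q for q in (payload["buyer_quotes"] or []) if q in transcript]
    # Empty or missing fields become alerts (e.g. no dated next step on the call).
    payload["missing"] = [key for key in FIELDS if not payload[key]]
    return payload
```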

Where humans remain essential

AI handles mechanics; humans deliver meaning. Reps and managers still own:

Empathy and trust, which are built through the relationship itself (APA meta-analysis)

Context and judgment: power dynamics, when to deviate, how to calibrate tone for senior stakeholders

Ethical discretion: when not to record, what to omit from systems, and how to handle sensitive topics

AI teaches the “how.” Managers coach the “why.”

People • Process • Platform: the operating model

To make active listening a revenue system, align three parts.

People: Run a simple coaching loop. Reps complete self-reviews. Managers discuss 2–3 clips per rep each week. Teams share “best listening” moments.

Process: Standardize “what good looks like” so everyone can practice it.

Platform: Use conversation intelligence + LLM prompts + a CRM field pack to operationalize voice of customer (VOC).

The LISTEN framework (our signature)

L — Lead with intent: Set an agenda and an outcome. “By the end of this call, let’s confirm success criteria and whether a pilot makes sense.”

I — Inquire with depth: Progress from pain to criteria. Examples:

“What happens today because this isn’t solved?”

“If you solved it, what would change for the business?”

“How will you decide between options?”

S — Summarize and mirror: Paraphrase, label emotion, and confirm. “Sounds like reporting gaps have eroded trust with leadership—did I get that right?”

T — Test assumptions: Invite disconfirming evidence. “What might I be missing?” “What alternatives are you considering?”

E — Empathize: Use the buyer’s words to name stakes, timelines, and risk tolerance. “You need a Q4 decision and can’t risk a messy handoff.”

N — Nail next steps: Leave with a mutual action plan (owner, action, date, outcome).
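"Nail next steps" is easy to audit mechanically once a next step is captured as structured data. This sketch assumes a hypothetical `NextStep` record with the four parts named above (owner, action, date, outcome) and returns whatever is missing, plus a flag when the due date is not after the call.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class NextStep:
    owner: Optional[str]     # who does it (buyer- or seller-side)
    action: Optional[str]    # what happens
    due: Optional[date]      # when it happens
    outcome: Optional[str]   # what "done" looks like for both sides


def next_step_gaps(step: NextStep, call_date: date) -> list[str]:
    """Return the parts of a mutual next step that are missing or invalid."""
    gaps = [name for name in ("owner", "action", "due", "outcome")
            if not getattr(step, name)]  # None or empty string counts as missing
    if step.due is not None and step.due <= call_date:
        gaps.append("due date is not after the call")
    return gaps
```

An empty list means the call ended with a complete mutual action plan; anything else can trigger the missing-next-step alert described under "What AI can coach today."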

Micro-drills that change behavior fast

Pause for 10 seconds after key answers

Use the paraphrase ladder: reflect → distill → confirm

Ask “What else?” twice per call

Check assumptions once per call
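The drills are countable, so a coach (human or AI) can check them per call. A minimal sketch, assuming time-ordered turns with speaker labels and timestamps: the targets (a 10-second pause, "What else?" twice, one assumption check) come from the list above, while the `Turn` class, `drill_report` function, and assumption-check phrases are hypothetical. Silence is measured from the end of a buyer turn to the start of the next turn by anyone, so a pause the buyer fills still counts.

```python
import re
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str   # "rep" or another role label
    text: str
    start: float   # seconds
    end: float


# Hypothetical phrasings that count as an assumption check.
ASSUMPTION_CHECKS = ("what might i be missing", "what am i missing",
                     "correct me if", "is that a fair assumption")


def drill_report(turns: list[Turn], rep: str = "rep", pause_target_s: float = 10.0) -> dict:
    """Check one call against the micro-drill targets. Turns must be in time order."""
    rep_text = [t.text.lower() for t in turns if t.speaker == rep]
    what_else = sum(len(re.findall(r"\bwhat else\b", text)) for text in rep_text)
    assumption_checks = sum(any(p in text for p in ASSUMPTION_CHECKS) for text in rep_text)
    # Silence after a non-rep turn, whoever speaks next.
    long_pauses = sum(
        1 for prev, cur in zip(turns, turns[1:])
        if prev.speaker != rep and cur.start - prev.end >= pause_target_s
    )
    return {
        "what_else": what_else,
        "what_else_ok": what_else >= 2,
        "assumption_checks": assumption_checks,
        "assumption_ok": assumption_checks >= 1,
        "long_pauses": long_pauses,
    }
```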

The Listening Scorecard: metrics that matter

Track behavior, depth, and momentum—not just talk time.

Behavior

Talk-to-listen ratio by stage

Interruption count; average pause after answers

Paraphrases/labels per call

Count of layered questions

Discovery depth

Selection criteria captured (Y/N + detail)

Stakeholders identified and mapped

Buyer quotes captured (verbatim count)

Momentum and outcomes

Next-step clarity (owner/date/outcome)

Meeting→opportunity conversion

Sales cycle and win rate

Discount rate and forecast accuracy
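A scorecard is a roll-up of per-call signals. This sketch assumes a hypothetical `CallRecord` holding a few of the behavior, depth, and momentum fields above (already computed by your conversation-intelligence tool or CRM) and averages them per rep. Cycle length, win rate, discount rate, and forecast accuracy come from opportunity data rather than individual calls, so they are left out here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    rep: str
    rep_talk_share: float      # 0..1, from diarized audio
    interruptions: int
    paraphrases: int
    criteria_captured: bool    # selection criteria recorded on the opportunity
    next_step_complete: bool   # owner, date, and outcome all present
    became_opportunity: bool   # meeting converted to an opportunity


def _avg(values: list[float]) -> float:
    return round(sum(values) / len(values), 2)


def scorecard(calls: list[CallRecord]) -> dict[str, dict]:
    """Roll per-call signals up into a per-rep listening scorecard."""
    by_rep: dict[str, list[CallRecord]] = defaultdict(list)
    for call in calls:
        by_rep[call.rep].append(call)
    return {
        rep: {
            "calls": len(cs),
            "avg_talk_share": _avg([c.rep_talk_share for c in cs]),
            "avg_interruptions": _avg([c.interruptions for c in cs]),
            "avg_paraphrases": _avg([c.paraphrases for c in cs]),
            "criteria_rate": _avg([c.criteria_captured for c in cs]),  # bools count as 0/1
            "next_step_rate": _avg([c.next_step_complete for c in cs]),
            "meeting_to_opp_rate": _avg([c.became_opportunity for c in cs]),
        }
        for rep, cs in by_rep.items()
    }
```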

Guardrails: privacy, bias, and governance

Handle recordings and analytics with care.

Consent and notices: Inform participants and obtain consent. Align with GDPR/CPRA; document lawful basis and retention periods.

Data minimization: Redact PII, restrict access, and purge recordings on a schedule.

Human-in-the-loop: Require approval before AI writes to CRM or scores affect compensation.

Quality checks: Add hallucination guards, set confidence thresholds, and monitor model drift.

Data residency and vendors: Confirm regions and subprocessors; review DPAs and security.
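Two of these guardrails translate directly into code: redaction before storage and a confidence-plus-approval gate before CRM writes. The sketch below is illustrative only. Its regular expressions catch common email and North American phone formats but miss names, addresses, and many other identifiers, so a dedicated PII-detection service is the safer choice in production; the 0.8 threshold and the `route_update` states are hypothetical.

```python
import re

# Illustrative patterns only: they catch common email and North American phone
# formats, not names, addresses, or account numbers.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
PHONE = re.compile(r"(?:\+?\d{1,3}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}\b")


def redact(text: str) -> str:
    """Replace emails and phone numbers with placeholders before storage."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


def route_update(confidence: float, approved: bool, threshold: float = 0.8) -> str:
    """Decide what happens to an AI-proposed CRM update."""
    if confidence < threshold:
        return "hold"              # too uncertain: keep out of the CRM, sample for QA
    if not approved:
        return "pending_approval"  # a human must approve before anything is written
    return "write"
```

Even a high-confidence update waits for a human, which matches the human-in-the-loop rule above.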

Mini cases and patterns to copy

GenAI uplift in live conversations: In an NBER field study of customer-support agents, a generative AI assistant increased productivity by ~14%, with the largest gains for novices, by surfacing top-performer responses in real time. Expect faster ramp and more consistent discovery.

What top reps actually do: Vendor analyses (Gong Labs; vendor data) show top performers talk less, ask layered questions, paraphrase often, and use purposeful silence. The win isn’t the ratio; it’s how you use listening time.

VOC to growth: Teams that route buyer language into PMM/Product refresh messaging faster, tailor demos to outcomes, and spot pricing signals earlier.

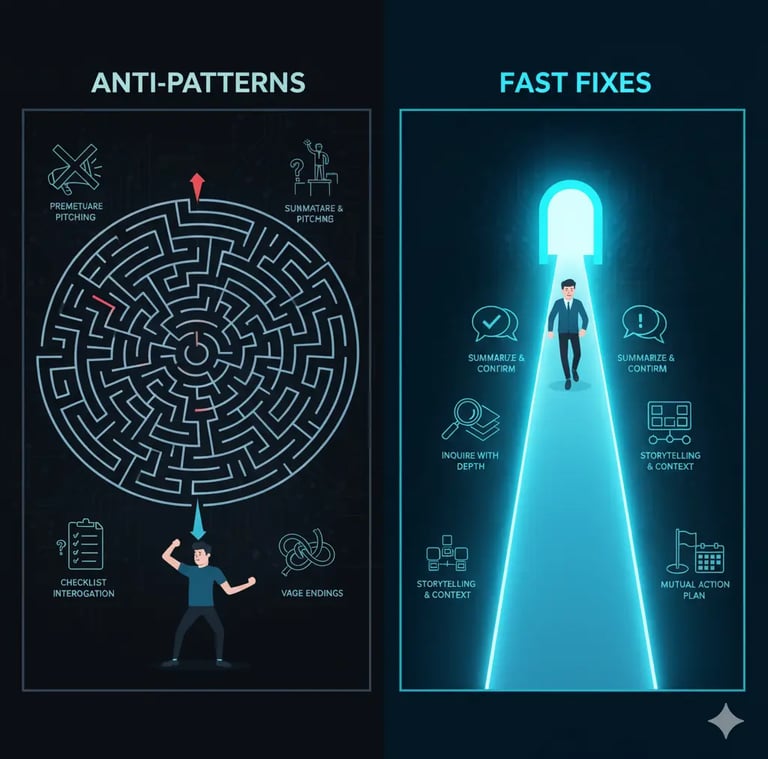

Common anti-patterns (with fast fixes)

Premature pitching → Summarize the buyer’s words first; confirm; then propose.

Checklist interrogation → Stay on one theme to depth (pain → impact → priority) before switching.

Monologue demos → Storyboard with buyer quotes and criteria; confirm at each milestone.

Vague endings → Always leave with a mutual action plan in the buyer’s words.

Conclusion: Mechanics + meaning = revenue

AI can teach and scale the mechanics of active listening—better questions, stronger paraphrases, effective silence, and crisp next steps. Humans add meaning: empathy, context, trust, and judgment. Put both on a RevOps backbone and you’ll turn recorded conversations into better deals, faster cycles, and sharper messaging.

Talk to BriskFab to launch a 30-day AI‑coached listening rollout that pairs training with RevOps instrumentation and automation. Or start now: download the Listening Scorecard and prompt pack, then run your first coaching loop this week.


FAQ

1. How does AI improve active listening in sales conversations?

AI scales the mechanics of listening (e.g., layered questions, paraphrasing), coaching reps consistently across every call. Humans then focus on the empathy and judgment that build trust and pipeline.

2. What are revenue-first listening behaviors AI can coach?

AI coaches behaviors like asking layered questions (pain → criteria), effective paraphrasing, using purposeful silence, and nailing mutual next steps – all tied to advancing deals.

3. How does AI extract buyer language for sales follow-ups?

Conversation intelligence platforms use LLMs to extract buyer verbatim phrases directly from call transcripts, enabling personalized follow-ups and outcome-based demo storylines.

4. What is the "LISTEN framework" for sales discovery?

The LISTEN framework is BriskFab's signature methodology: Lead with intent, Inquire with depth, Summarize & mirror, Test assumptions, Empathize, and Nail next steps, guiding reps through effective conversations.

5. What are common anti-patterns in sales listening and their fixes?

Common anti-patterns include premature pitching and checklist interrogation. Fixes involve summarizing buyer words first, staying on one theme for depth, and always leaving with a mutual action plan.

6. How can RevOps operationalize voice of customer (VoC) with AI?

RevOps uses AI to capture VoC data (buyer pains, criteria) from calls, pushing it to CRM fields, personalizing sequences, and informing PMM/Product teams to refine messaging and strategy.